Paper reading - ADADELTA AN ADAPTIVE LEARNING RATE METHOD

This paper was written by Matthew D. Zeiler while he was an intern at Google.

Introduction

The aim of many machine learning methods is to update a set of parameters $x$ in order to optimize an objective function $f(x)$. This often involves an iterative procedure that applies a change $\Delta x$ to the parameters at each iteration of the algorithm. Denoting the parameters at the $t$-th iteration as $x_t$, the simple update rule is:

$$x_{t+1} = x_t + \Delta x_t, \qquad \Delta x_t = -\eta g_t$$

where:

- $g_t$ is the gradient of the objective with respect to the parameters at the $t$-th iteration

- $\eta$ is a learning rate that controls how large a step to take in the direction of the negative gradient
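The basic rule can be shown with a minimal sketch of plain gradient descent. It uses a toy one-dimensional objective $f(x) = (x - 3)^2$. The function names and the objective are illustrative choices, not taken from the paper.

```python
def sgd_step(x, g, lr):
    """One update x_{t+1} = x_t + delta_t with delta_t = -lr * g_t."""
    delta = -lr * g
    return x + delta


def grad_f(x):
    """Gradient of the toy objective f(x) = (x - 3)^2."""
    return 2.0 * (x - 3.0)


x = 0.0
for t in range(100):
    x = sgd_step(x, grad_f(x), lr=0.1)

print(round(x, 4))  # prints 3.0
```

For this objective, each step multiplies the error $x_t - 3$ by $1 - 2\eta$. With $\eta = 0.1$ that factor is 0.8, so 100 steps shrink the error far below the printed precision.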

Purpose

ADADELTA is derived from ADAGRAD and aims to fix that method's two main drawbacks:

- the continual decay of learning rates throughout training

- the need for a manually selected global learning rate.
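The first drawback is easy to see in isolation. ADAGRAD divides $\eta$ by the square root of the sum of all past squared gradients, so the effective step keeps shrinking even when the gradient itself does not change. The hypothetical helper below is a sketch of my own, not code from the paper. It tracks that scale for a constant gradient of 1.0, where it falls as $\eta / \sqrt{t}$.

```python
import math


def adagrad_effective_lrs(grads, lr=0.1):
    """Hypothetical helper: the per-step ADAGRAD scale lr / sqrt(sum of squared gradients so far)."""
    sum_sq = 0.0
    scales = []
    for g in grads:
        sum_sq += g * g
        # Assumes the first gradient is nonzero, otherwise this divides by zero.
        scales.append(lr / math.sqrt(sum_sq))
    return scales


# Constant gradient of 1.0: the scale is lr / sqrt(t) for t = 1, 2, 3, ...
scales = adagrad_effective_lrs([1.0] * 100, lr=0.1)
print(scales[0], scales[3], scales[99])  # approximately 0.1, 0.05, 0.01
```

The accumulated sum never decreases, so the step size can only go down. Late in training the updates can become too small to make progress.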

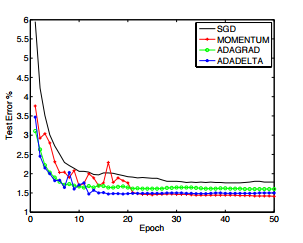

SGD vs ADAGRAD vs ADADELTA

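- SGD (with momentum):

$$\Delta x_t = \rho \Delta x_{t-1} - \eta g_t$$

- where $\rho$ is a constant controlling the decay of the previous parameter updates

- ADAGRAD:

$$\Delta x_t = -\frac{\eta}{\sqrt{\sum_{\tau=1}^{t} g_\tau^2}} g_t$$

- ADADELTA:

$$E[g^2]_t = \rho E[g^2]_{t-1} + (1 - \rho) g_t^2$$

$$\mathrm{RMS}[g]_t = \sqrt{E[g^2]_t + \epsilon}$$

$$\Delta x_t = -\frac{\mathrm{RMS}[\Delta x]_{t-1}}{\mathrm{RMS}[g]_t} g_t$$

$$E[\Delta x^2]_t = \rho E[\Delta x^2]_{t-1} + (1 - \rho) \Delta x_t^2$$

- where a constant $\epsilon$ is added to better condition the denominator

- where $E[g^2]_t$ is the exponentially decaying average of the squared gradients up to time $t$ (a running estimate of the expected value of $g^2$); $E[\Delta x^2]_t$ and $\mathrm{RMS}[\Delta x]_t = \sqrt{E[\Delta x^2]_t + \epsilon}$ are defined the same way for the parameter updates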
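To compare the three update rules side by side, here is a minimal pure-Python sketch for a single scalar parameter. Several choices are my own and used only for illustration:

- the class names
- the momentum and ADAGRAD learning rates
- the toy objective $f(x) = (x - 3)^2$

The ADADELTA defaults $\rho = 0.95$ and $\epsilon = 10^{-6}$ match the values in the personal note at the end. The ADADELTA step follows the order of the equations above: update $E[g^2]$, compute $\Delta x_t$ using the previous $\mathrm{RMS}[\Delta x]$, then update $E[\Delta x^2]$.

```python
import math


class Momentum:
    """SGD with momentum: delta_t = rho * delta_{t-1} - lr * g_t."""

    def __init__(self, lr=0.1, rho=0.9):
        self.lr = lr
        self.rho = rho
        self.prev_delta = 0.0

    def step(self, x, g):
        delta = self.rho * self.prev_delta - self.lr * g
        self.prev_delta = delta
        return x + delta


class Adagrad:
    """ADAGRAD: delta_t = -lr / sqrt(sum of g_tau^2) * g_t (no epsilon, as in the formula)."""

    def __init__(self, lr=1.0):
        self.lr = lr
        self.sum_sq = 0.0

    def step(self, x, g):
        self.sum_sq += g * g
        # Assumes the first gradient is nonzero, otherwise this divides by zero.
        return x - self.lr / math.sqrt(self.sum_sq) * g


class Adadelta:
    """ADADELTA: no global learning rate, only the decay rate rho and the constant eps."""

    def __init__(self, rho=0.95, eps=1e-6):
        self.rho = rho
        self.eps = eps
        self.avg_sq_grad = 0.0   # E[g^2]
        self.avg_sq_delta = 0.0  # E[delta_x^2]

    def step(self, x, g):
        # Decaying average of squared gradients: E[g^2]_t.
        self.avg_sq_grad = self.rho * self.avg_sq_grad + (1 - self.rho) * g * g
        rms_delta_prev = math.sqrt(self.avg_sq_delta + self.eps)  # RMS[delta_x]_{t-1}
        rms_grad = math.sqrt(self.avg_sq_grad + self.eps)         # RMS[g]_t
        delta = -(rms_delta_prev / rms_grad) * g
        # Decaying average of squared updates: E[delta_x^2]_t.
        self.avg_sq_delta = self.rho * self.avg_sq_delta + (1 - self.rho) * delta * delta
        return x + delta


def minimize(opt, steps, x0=0.0):
    """Run `steps` updates on the toy objective f(x) = (x - 3)^2."""
    x = x0
    for _ in range(steps):
        x = opt.step(x, 2.0 * (x - 3.0))
    return x


for name, opt in [("momentum", Momentum()), ("adagrad", Adagrad()), ("adadelta", Adadelta())]:
    print(name, minimize(opt, 300))
```

The momentum and ADAGRAD learning rates above were picked by hand for this toy objective, which is the kind of tuning ADADELTA tries to remove. ADADELTA has its own quirk at the start. Because $E[\Delta x^2]_0 = 0$, its first step has size about $\sqrt{\epsilon}\,|g_1| / \mathrm{RMS}[g]_1$, roughly 0.0045 here. The $\epsilon$ in the numerator is what makes that first step nonzero. On a problem this small, ADADELTA therefore starts with tiny steps and grows them only gradually.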

Result

Compared with SGD, ADAGRAD and momentum, ADADELTA generally converges faster and reaches a lower error rate.

Personal Thought

I have tried both ADADELTA and SGD. Although each ADADELTA epoch takes longer to compute, I only had to set the default values $\rho = 0.95$ and $\epsilon = 10^{-6}$, and it learned very well. With SGD, I had to fine-tune the learning rate, and the error rate was often higher than with ADADELTA.
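This toy sketch makes the tuning point concrete. It is my own example, not an experiment from the paper. It runs plain SGD on $f(x) = (x - 3)^2$ with three learning rates. For this function each step multiplies the error by $1 - 2\eta$, so the result depends heavily on $\eta$:

- a very small rate leaves most of the initial error in place after 100 steps;
- a moderate rate converges;
- any rate above 1.0 diverges.

```python
def run_sgd(lr, steps=100, x0=0.0):
    """Plain SGD on the toy objective f(x) = (x - 3)^2, whose gradient is 2 * (x - 3)."""
    x = x0
    for _ in range(steps):
        x -= lr * 2.0 * (x - 3.0)
    return x


# Each step multiplies the error (x - 3) by (1 - 2 * lr), so the outcome hinges on lr.
for lr in (0.001, 0.1, 1.1):
    print(f"lr={lr}: final error {abs(run_sgd(lr) - 3.0):.3g}")
```

ADADELTA's two constants play a different role. They shape running averages rather than setting the step size directly, which is the intuition behind the claim that its defaults need little tuning.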